There may be a few things happening here.

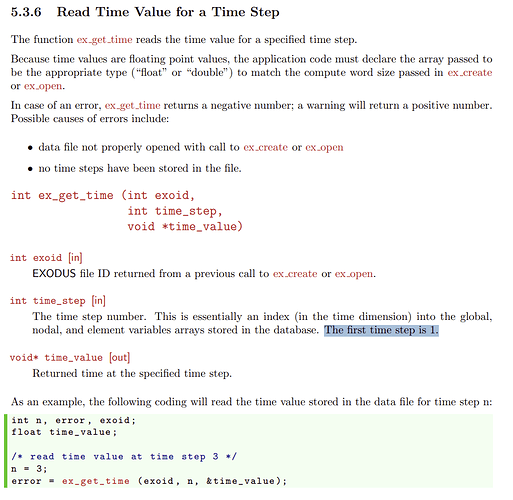

The variable testVar that you’re using for the scaling appears to be an element variable. From the documentation:

If an element-based variable is used for the sizing function each node is assigned a value that is the average of variables on all connected elements. Nodal variables are used directly.

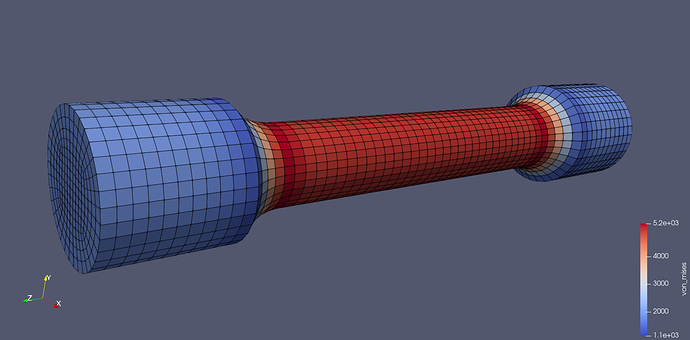

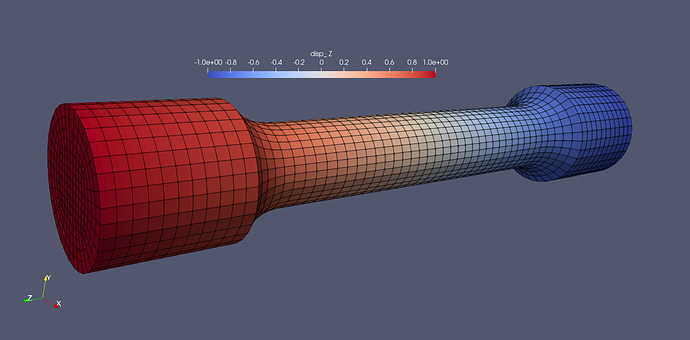

Looking at the exodus file in ParaView, with a clip filter applied, my guess is that the averaging step is “rounding up” a lot of the values.
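To illustrate why averaging can wash out small values, here is a minimal sketch of the element-to-node averaging described in the documentation quote above. The 1D connectivity and the element values are made up for illustration, and this is not Cubit’s actual implementation, just the averaging rule as stated.

```python
from collections import defaultdict

def element_to_nodal(connectivity, element_values):
    """Give each node the mean of the values on all elements that touch it."""
    touching = defaultdict(list)
    for elem_id, nodes in enumerate(connectivity):
        for node in nodes:
            touching[node].append(element_values[elem_id])
    return {node: sum(vals) / len(vals) for node, vals in sorted(touching.items())}

# Hypothetical strip of four two-node elements: one "fine" element among coarse ones.
connectivity = [(0, 1), (1, 2), (2, 3), (3, 4)]
element_values = [0.003, 0.003, 0.0008, 0.003]

nodal = element_to_nodal(connectivity, element_values)
for node, value in nodal.items():
    print(node, round(value, 5))
```

In this toy case the two nodes of the fine element each end up at the mean of 0.0008 and 0.003, so the smallest nodal value is noticeably larger than the smallest element value.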

I used the CellDataToPointData filter as a poor man’s approach to getting nodal variables instead, and then did Save Data to a new exodus file.

Note that if you use the ParaView-generated exodus file, the blocks get renumbered (for me anyways) to blocks 10 and 92 for some unknown reason. The best thing for you to do is to request your solver to output the element data as nodal data. I assume you’re using MOOSE? If so, I believe you’ll want to use ProjectionAux to do this.

Also, note that the actual data range of the tet-meshed blocks is [0.0008263886326796536, 0.003]. However, since you used block all in the import sizing function command, it grabs the min/max values across all blocks, which is [0, 0.003]. This means that when you set min_size in the sizing function exodus command, a value of 0.0 maps to a size of 0.0008, while the minimum value within the tet-meshed blocks maps to a size of about 0.0014, making your effective sizing range [0.0014, 0.003]. Instead, you’ll want to use block 1 2 (or block 10 92 if using the ParaView approach).
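The arithmetic behind those numbers can be checked with a short sketch. It assumes the variable range is mapped linearly onto [min_size, max_size]. I’m inferring that from the values above rather than from Cubit’s source, and the function name map_to_size is made up.

```python
def map_to_size(value, data_min, data_max, min_size, max_size):
    """Linearly map a variable value from [data_min, data_max] to [min_size, max_size].

    Assumption: the sizing function interpolates linearly between the two sizes.
    """
    t = (value - data_min) / (data_max - data_min)
    return min_size + t * (max_size - min_size)

block_min = 0.0008263886326796536   # smallest value inside the tet-meshed blocks
all_blocks = (0.0, 0.003)           # data range picked up with "block all"
tet_blocks = (block_min, 0.003)     # data range of the tet-meshed blocks only
min_size, max_size = 0.0008, 0.003

size_all = map_to_size(block_min, *all_blocks, min_size, max_size)
size_tet = map_to_size(block_min, *tet_blocks, min_size, max_size)

print(f"block all     : smallest size ~ {size_all:.4f}")
print(f"tet blocks only: smallest size ~ {size_tet:.4f}")
```

With the range taken from all blocks, the smallest value in the tet-meshed region lands at roughly 0.0014. Restricting the import to the tet-meshed blocks brings it back down to min_size.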

With the above two changes (using the ParaView approach), I now have:

```
reset
open "microstrip_coarse.cub5"
reset vol all
vol all scheme tetmesh
import sizing function "microstrip_coarse_nodal.e" block 10 92 variable "testVar" step 2
volume 1 2 sizing function exodus min_size 0.0008 max_size 0.003
mesh vol 1 2
```

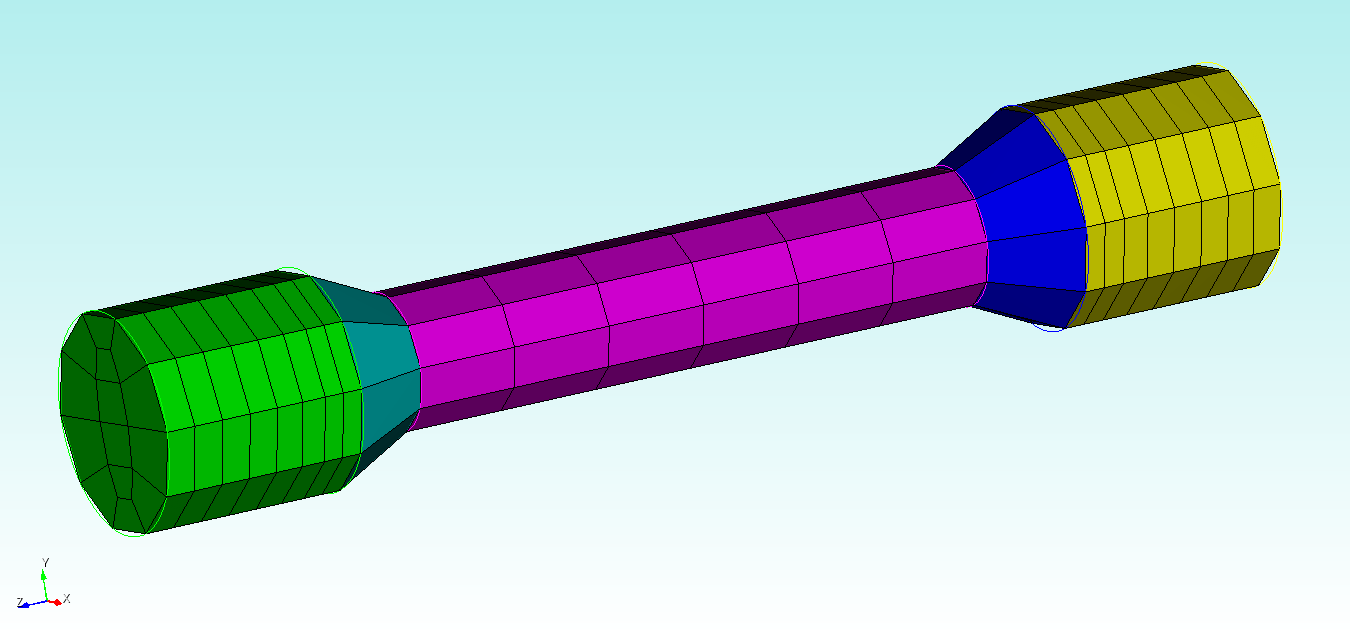

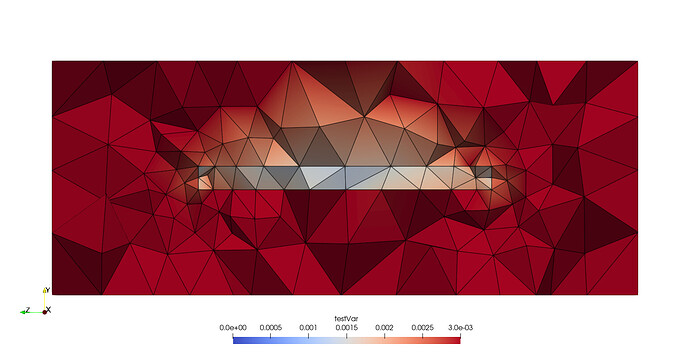

This still results in a pretty coarse mesh:

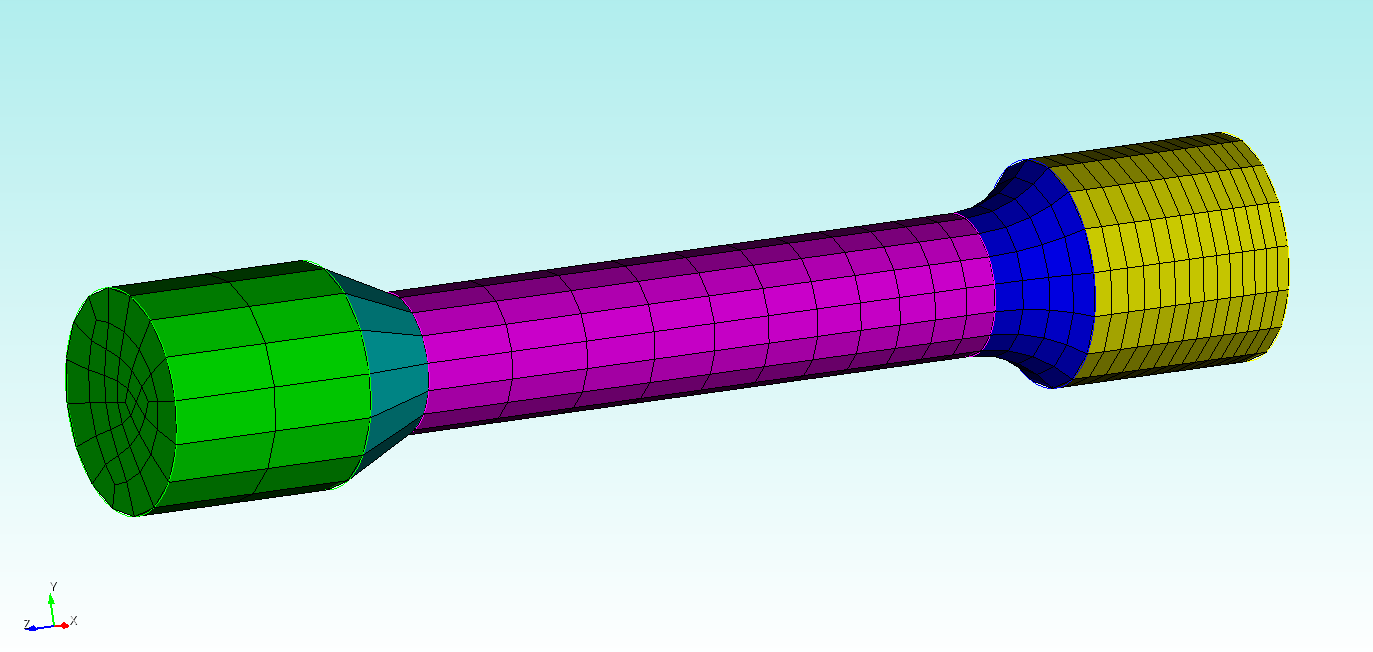

This is likely due to the relatively large region that will have a large mesh size (0.003) and the relatively small region whose mesh size is still ~1/3 the size of the coarse mesh. Arguably, this is exactly what you asked for, as the element lengths on the top of the yellow volume are ~0.003 and in the green volume are ~0.001. If you want a starker contrast, try decreasing the min_size to {0.0008/10}:

And if you want the mesh to be finer than that, you could either decrease min_size and max_size together, for example to [{0.0008/10}, {0.003/10}], or use the optional argument Scale_Mesh_Multiplier <value>. Here are two examples:

```
volume 1 2 sizing function exodus min_size {0.0008/1} max_size {0.003/1} Scale_Mesh_Multiplier 10
volume 1 2 sizing function exodus min_size {0.0008/10} max_size {0.003/1} Scale_Mesh_Multiplier 10
```

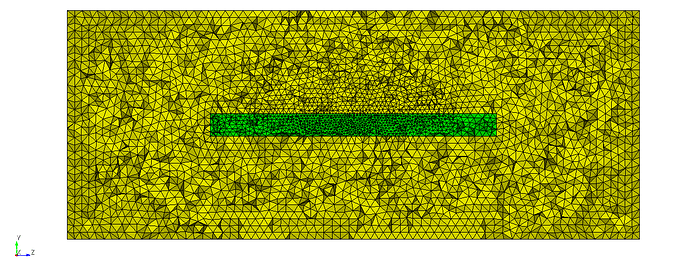

I’m partial to the final mesh, so here’s the script (again, using the ParaView-generated exodus file):

```
reset
open "microstrip_coarse.cub5"
reset vol all
vol all scheme tetmesh
import sizing function "microstrip_coarse_nodal.e" block 10 92 variable "testVar" step 2
volume 1 2 sizing function exodus min_size {0.0008/10} max_size {0.003/1} Scale_Mesh_Multiplier 10
mesh vol 1 2
```